Named Entity Recognition

Exploring different NLP models for Named Entity Recognition.

Created Using: Python, TensorFlow, Keras, PyTorch, Flask, ReactJS

The code for this project is available at: github.com/HitanshShah/named-entity-recognition. Reach out to me via email if you have any queries related to this project.

Named entities are the key subjects of a piece of text, such as names, locations, organizations, events, themes, and topics. Named Entity Recognition (NER) is a Natural Language Processing (NLP) method that identifies and classifies these entities in text. NER is regarded as a foundation for information extraction and can support more complex downstream tasks such as sentiment analysis and topic modeling.

Models for Named Entity Recognition:

- Conditional Random Field (CRF): A CRF models dependencies between sequential labels (for example, that an I-PER tag should follow B-PER or I-PER rather than O), so it predicts the label sequence as a whole instead of tagging each token in isolation. Modeling these dependencies improved the system's identification of entities such as persons, organizations, and locations within unstructured text.

- BERT + CRF: BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained Transformer language model developed by Google that can be fine-tuned for NLP tasks such as sentiment analysis, NER, and question answering. To improve NER accuracy, we added a CRF layer on top of BERT so that each tag prediction takes the neighboring tags into account. This approach combines BERT's contextual understanding with the CRF's structured predictions, improving the identification of named entities in text.

- BiLSTM + CRF: A BiLSTM (Bidirectional Long Short-Term Memory network) reads the input sequence in both directions, so each token's representation captures both preceding and following context, and it handles sentences of variable length. A BiLSTM on its own predicts each tag independently, so a CRF layer is added to model dependencies between labels, which improves NER performance. In this approach, pre-trained word embeddings are combined with character-level embeddings generated by a CNN and fed into the BiLSTM. A further variant adds ELMo embeddings, derived from a deep bidirectional language model, while the CRF continues to model label dependencies during inference.

- Flair (FLERT Approach): Flair, developed by Zalando Research, is a PyTorch-based NLP framework. FLERT, an approach implemented in Flair, improves Named Entity Recognition (NER) by giving the model document-level context, that is, the sentences surrounding the one being tagged. Text is represented with a combination of pre-trained embeddings, such as contextual string embeddings (CSE), GloVe, and FastText, which are fed into a linear-chain Conditional Random Field (CRF) for prediction. FLERT also supports fine-tuning the embeddings for domain-specific improvements.

- Automated Concatenation of Embeddings (ACE): ACE automates the search for better concatenations of embeddings for structured prediction tasks such as NER. It defines a search space of possible embedding combinations and uses reinforcement learning to train a controller that chooses which embeddings to concatenate. Each candidate concatenation is evaluated by the performance of a task model on a validation set, and that result guides the controller toward better combinations.
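The CRF-based models above share the same final step: a linear-chain CRF picks the label sequence with the highest total score, where the score adds up per-token emission scores (produced by BERT, a BiLSTM, or stacked Flair embeddings) and label-to-label transition scores. Below is a minimal, dependency-free sketch of that Viterbi decoding step. The function name, the dictionary-based score format, and the toy scores are illustrative assumptions, not the project's code. Real implementations work over tensors and learn the transition scores during training.

```python
from typing import Dict, List, Sequence, Tuple


def viterbi_decode(
    emissions: Sequence[Dict[str, float]],
    transitions: Dict[Tuple[str, str], float],
    labels: Sequence[str],
) -> Tuple[List[str], float]:
    """Return the highest-scoring label sequence and its total score.

    emissions[t][y]: score of label y at token t (e.g. from BERT or a BiLSTM).
    transitions[(prev, y)]: score of moving from label prev to y; missing pairs score 0.
    """
    if not emissions:
        return [], 0.0
    # best[t][y]: best score of any label path that ends in label y at token t
    best: List[Dict[str, float]] = [{y: emissions[0][y] for y in labels}]
    back: List[Dict[str, str]] = []  # back[t-1][y]: best previous label for y at t
    for t in range(1, len(emissions)):
        scores: Dict[str, float] = {}
        pointers: Dict[str, str] = {}
        for y in labels:
            # pick the previous label that maximizes path score + transition score
            prev, score = max(
                ((p, best[-1][p] + transitions.get((p, y), 0.0)) for p in labels),
                key=lambda pair: pair[1],
            )
            scores[y] = score + emissions[t][y]
            pointers[y] = prev
        best.append(scores)
        back.append(pointers)
    # follow the back-pointers from the best final label
    last = max(labels, key=lambda y: best[-1][y])
    path = [last]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[-1][last]


labels = ["O", "B-PER", "I-PER"]
# made-up emission scores for the tokens "John", "Smith", "runs"
emissions = [
    {"O": 0.1, "B-PER": 2.0, "I-PER": 0.0},
    {"O": 0.2, "B-PER": 1.0, "I-PER": 0.8},
    {"O": 1.5, "B-PER": 0.1, "I-PER": 0.2},
]
# made-up transitions: reward B-PER -> I-PER, penalize O -> I-PER and B-PER -> B-PER
transitions = {("B-PER", "I-PER"): 1.0, ("O", "I-PER"): -10.0, ("B-PER", "B-PER"): -1.0}
path, score = viterbi_decode(emissions, transitions, labels)
print(path)  # ['B-PER', 'I-PER', 'O']
```

With these toy scores, choosing the best label for each token independently would tag "Smith" as B-PER (1.0 > 0.8) and start a second entity; the transition bonus for B-PER to I-PER lets the CRF keep "John Smith" as a single person entity.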
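The ACE search described above can be illustrated with a deliberately simplified sketch: a controller holds one logit per candidate embedding, samples which embeddings to concatenate, and is updated with the REINFORCE policy gradient, using the validation score as the reward. The function name `ace_style_search`, the running-average baseline, and `toy_dev_score` with its invented gains are all hypothetical. In ACE itself every sample requires training a task model on the chosen concatenation, and the paper shapes its reward differently, so treat this as an outline of the idea rather than the published algorithm.

```python
import math
import random
from typing import Callable, Dict, List, Optional

Mask = Dict[str, bool]  # embedding name -> include it in the concatenation?


def ace_style_search(
    embeddings: List[str],
    dev_score: Callable[[Mask], float],
    steps: int = 300,
    lr: float = 0.5,
    seed: int = 0,
) -> Optional[Mask]:
    """Simplified ACE-style search: a Bernoulli controller trained with REINFORCE."""
    rng = random.Random(seed)
    logits = {name: 0.0 for name in embeddings}  # sigmoid(logit) = P(include)
    baseline: Optional[float] = None  # running average of past rewards
    best_mask: Optional[Mask] = None
    best_score = float("-inf")

    for _ in range(steps):
        probs = {n: 1.0 / (1.0 + math.exp(-logits[n])) for n in embeddings}
        mask = {n: rng.random() < probs[n] for n in embeddings}
        if not any(mask.values()):
            continue  # an empty concatenation gives the tagger no input; skip this sample
        score = dev_score(mask)  # in ACE: train a tagger on this concatenation, score on dev
        if score > best_score:
            best_mask, best_score = mask, score
        if baseline is None:
            baseline = score
        advantage = score - baseline
        for n in embeddings:
            # gradient of log P(mask) w.r.t. the logit of a Bernoulli(sigmoid(logit)) choice
            logits[n] += lr * advantage * (float(mask[n]) - probs[n])
        baseline = 0.9 * baseline + 0.1 * score
    return best_mask


# Hypothetical stand-in for "train a tagger on this concatenation and return its
# validation F1": the per-embedding gains are invented purely to exercise the search.
TOY_GAINS = {"glove": 0.02, "char_cnn": 0.01, "flair": 0.04, "bert": 0.05}


def toy_dev_score(mask: Mask) -> float:
    return 0.85 + sum(gain for name, gain in TOY_GAINS.items() if mask[name])


best = ace_style_search(list(TOY_GAINS), toy_dev_score)
print(best)
```

This sketch returns the best concatenation observed during the search rather than reading off the controller's final probabilities, a simple choice when every evaluation is expensive.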

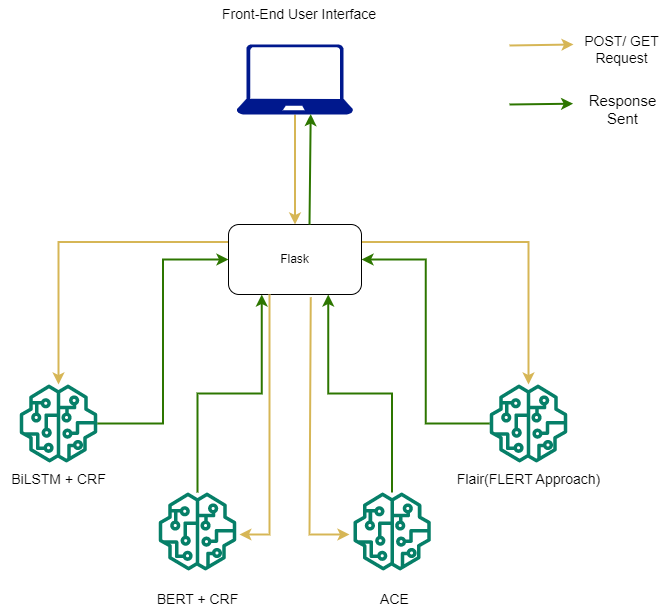

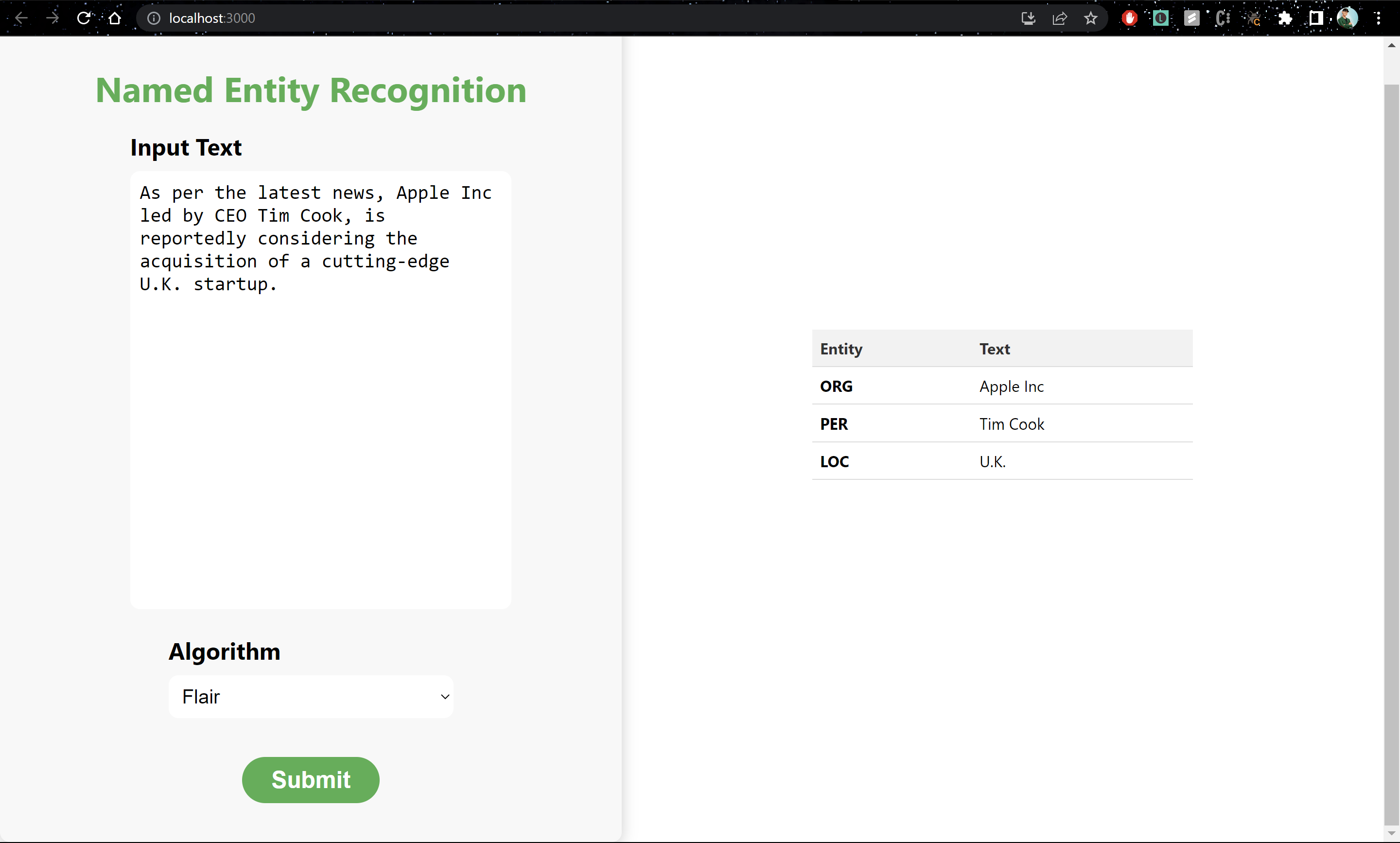

We trained and evaluated these models on the CoNLL-2003 dataset, which was extracted from the Reuters Corpus. It contains NER tags for four categories: Person, Location, Organization, and Miscellaneous. After training, we built a Flask API for each model and a ReactJS user interface that lets users enter text and choose which model performs Named Entity Recognition.
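CoNLL-2003 labels each token with a tag such as B-PER, I-LOC, or O, so an API that returns results to a user interface typically has to group token-level tags into whole entities. A small helper for that step is sketched below. It assumes the BIO (IOB2) scheme used by common redistributions of CoNLL-2003; the original files use IOB1, which the helper also tolerates because an I- tag that does not continue the open entity starts a new one. The function name and the example sentence are hypothetical.

```python
from typing import List, Optional, Tuple


def bio_to_spans(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group BIO (IOB2) tags into (entity_type, entity_text) spans."""
    spans: List[Tuple[str, str]] = []
    current_type: Optional[str] = None
    current_tokens: List[str] = []

    def flush() -> None:
        # close the open entity, if any, and record it as a span
        nonlocal current_type, current_tokens
        if current_type is not None:
            spans.append((current_type, " ".join(current_tokens)))
        current_type, current_tokens = None, []

    for token, tag in zip(tokens, tags):
        if tag == "O":
            flush()
            continue
        prefix, _, entity_type = tag.partition("-")  # "B-PER" -> ("B", "-", "PER")
        if prefix == "B" or entity_type != current_type:
            # a B- tag, or an I- tag that does not continue the open entity, starts a new span
            flush()
            current_type = entity_type
        current_tokens.append(token)
    flush()
    return spans


tokens = ["Maria", "Lopez", "joined", "Acme", "Corp", "in", "New", "York", "."]
tags = ["B-PER", "I-PER", "O", "B-ORG", "I-ORG", "O", "B-LOC", "I-LOC", "O"]
spans = bio_to_spans(tokens, tags)
print(spans)  # [('PER', 'Maria Lopez'), ('ORG', 'Acme Corp'), ('LOC', 'New York')]
```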

NER results for each of the models

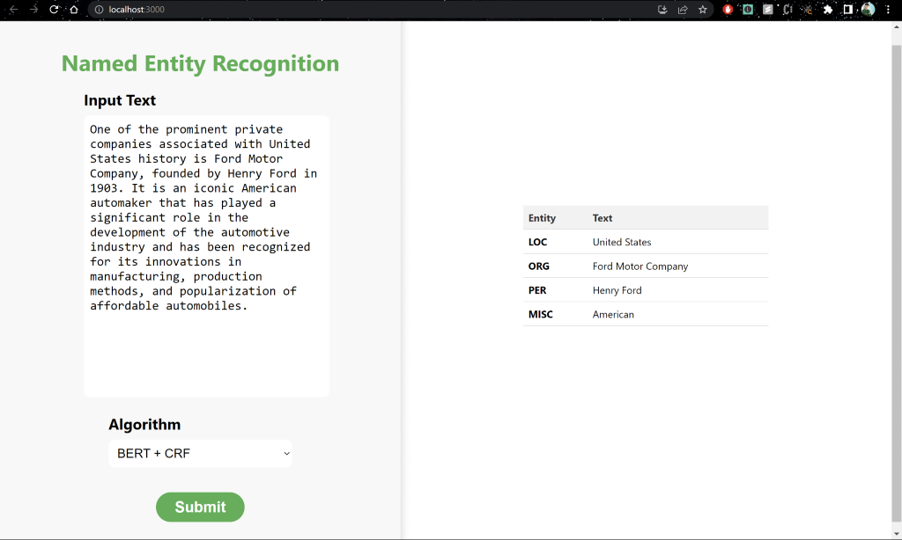

BERT + CRF

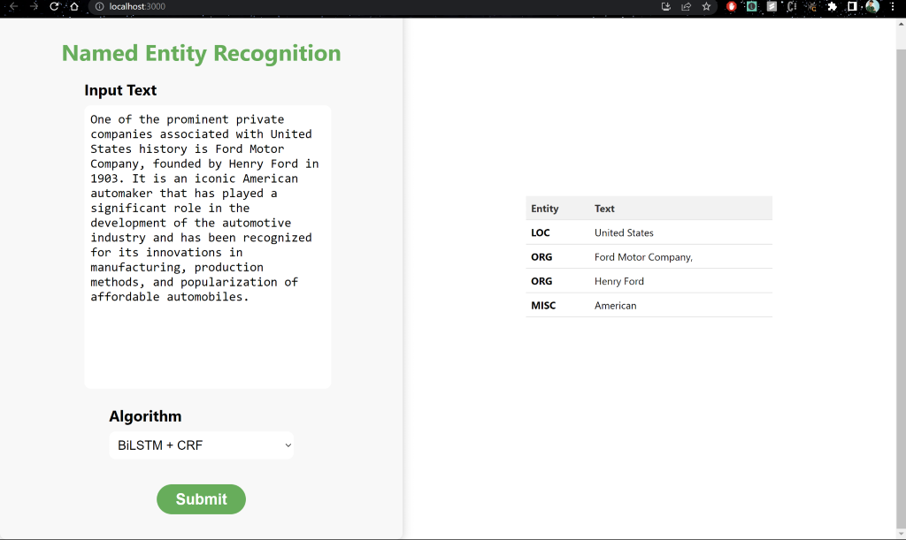

BiLSTM + CRF

Flair

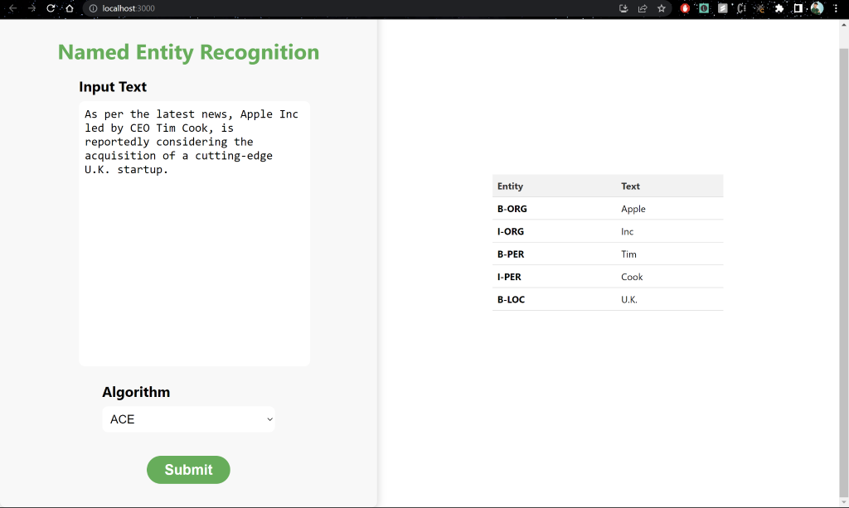

Automated Concatenation of Embeddings (ACE)